Science gets a lot of respect these days. Unfortunately, it’s also getting a lot of competition from misinformation. Seven in 10 Americans think the benefits from science outweigh the harms, and nine in 10 think science and technology will create more opportunities for future generations. Scientists have made dramatic progress in understanding the universe and the mechanisms of biology, and advances in computation benefit all fields of science.

On the other hand, Americans are surrounded by a rising tide of misinformation and fake science. Take climate change. Scientists are in almost complete agreement that people are the primary cause of global warming. Yet polls show that a third of the public disagrees with this conclusion.

In my 30 years of studying and promoting scientific literacy, I’ve found that college-educated adults have large holes in their basic science knowledge and are disconcertingly susceptible to superstition and beliefs that aren’t based on any evidence. One way to counter this is to make it easier for people to detect pseudoscience online. To this end, my lab at the University of Arizona has developed an artificial intelligence-based pseudoscience detector that we plan to freely release as a web browser extension and smart phone app.

Americans’ predilection for fake science

Americans are prone to superstition and paranormal beliefs. An annual survey done by sociologists at Chapman University finds that more than half believe in spirits and the existence of ancient civilizations like Atlantis, and more than a third think that aliens have visited the Earth in the past or are visiting now. Over 75% hold multiple paranormal beliefs. The survey shows that these numbers have increased in recent years.

Widespread belief in astrology is a pet peeve of my colleagues in astronomy. It has long had a foothold in popular culture through horoscopes in newspapers and magazines, but currently it’s booming. Belief is strong even among the most educated. My surveys of college undergraduates show that three-quarters of them think that astrology is very or “sort of” scientific, and only half of science majors recognize it as not at all scientific.

Allan Mazur, a sociologist at Syracuse University, has delved into the nature of irrational belief systems, their cultural roots, and their political impact. Conspiracy theories are, by definition, resistant to evidence or data that might prove them false. Some are at least amusing. Adherents of the flat Earth theory turn back the clock on two millennia of scientific progress. Interest in this bizarre idea has surged in the past five years, spurred by social media influencers and the echo chamber nature of web sites like Reddit. As with climate change denial, many come to this belief through YouTube videos.

However, the consequences of fake science are no laughing matter. In health and climate change, misinformation can be a matter of life and death. Over a 90-day period spanning December, January and February, posts from sites containing false or misleading information about COVID-19 drew 142 times more likes, shares and comments than information from the Centers for Disease Control and Prevention and the World Health Organization.

Combating fake science is an urgent priority. In a world that’s increasingly dependent on science and technology, civic society can only function when the electorate is well informed.

Educators must roll up their sleeves and do a better job of teaching critical thinking to young people. However, the problem goes beyond the classroom. The internet is the first source of science information for 80% of people ages 18 to 24.

One study found that a majority of a random sample of 200 YouTube videos on climate change denied that humans were responsible or claimed that it was a conspiracy. The videos peddling conspiracy theories got the most views. Another study found that a quarter of all tweets on climate were generated by bots and they preferentially amplified messages from climate change deniers.

Technology to the rescue?

The recent success of machine learning and AI in detecting fake news points the way to detecting fake science online. The key is neural net technology. Neural nets are loosely modeled on the human brain. They consist of many interconnected processing units that identify meaningful patterns in data like words and images. Neural nets already permeate everyday life, particularly in natural language processing systems like Amazon’s Alexa and Google’s language translation capability.
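For readers who want to see what “interconnected units” means in practice, here is a minimal sketch of a two-layer neural net in Python. It is only an illustration: the weights are hypothetical, hand-set numbers, whereas a real net learns its weights from data, and the names `layer` and `forward` are made up for this example.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer of units: each unit takes a weighted sum of every input,
    adds its bias, and passes the result through the sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


# Hypothetical, hand-set weights chosen only to illustrate the wiring.
HIDDEN_W = [[1.0, -1.0], [-1.0, 1.0]]  # two hidden units, two inputs each
HIDDEN_B = [0.0, 0.0]
OUTPUT_W = [[2.0, -2.0]]               # one output unit fed by both hidden units
OUTPUT_B = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output unit."""
    hidden = layer(inputs, HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


# The output is above 0.5 when the first input dominates, below when the second does.
print(forward([1.0, 0.0]), forward([0.0, 1.0]))
```

Every unit in one layer feeds every unit in the next, which is the “interconnected” part. Training consists of nudging the weights until the outputs match known examples.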

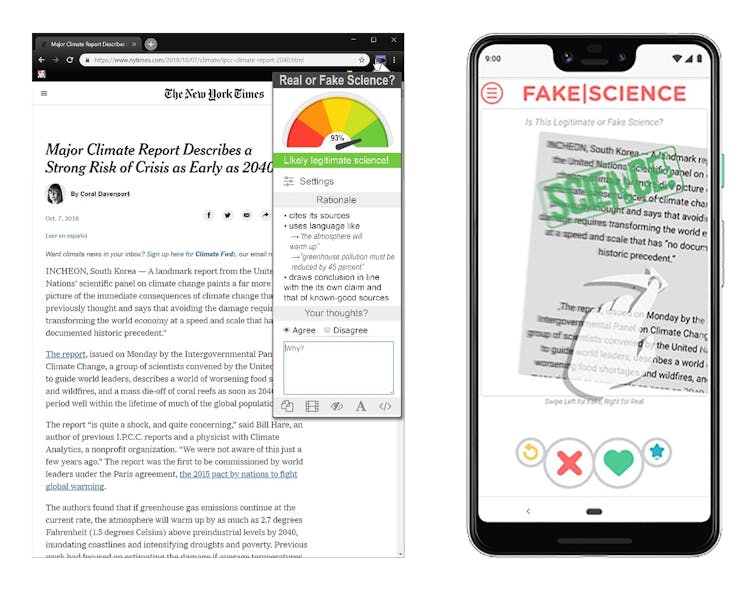

At the University of Arizona, we have trained neural nets on handpicked popular articles about climate change and biological evolution, and the neural nets are 90% successful in distinguishing wheat from chaff. With a quick scan of a site, our neural net can tell if its content is scientifically sound or climate-denial junk. After more refinement and testing we hope to have neural nets that can work across all domains of science.

Chris Impey, CC BY-ND
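The idea of training on handpicked, labeled articles can be sketched in a few lines of Python. This is not our lab’s model: it is the simplest possible stand-in, a single-layer net (logistic regression) over a bag of words, and the four example sentences are invented purely for illustration. The names `tokenize`, `train` and `predict_proba` are likewise hypothetical.

```python
import math
import re


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def predict_proba(weights, bias, text):
    """Estimated probability (0 to 1) that the text is scientifically sound."""
    z = bias + sum(weights.get(word, 0.0) for word in set(tokenize(text)))
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=200, lr=0.5):
    """Fit one weight per word by stochastic gradient descent on log loss."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for text, label in examples:
            error = predict_proba(weights, bias, text) - label
            bias -= lr * error
            for word in set(tokenize(text)):
                weights[word] = weights.get(word, 0.0) - lr * error
    return weights, bias


# Invented toy examples (label 1 = sound, 0 = junk); real training data
# would be hundreds of full, hand-labeled articles.
EXAMPLES = [
    ("Peer reviewed measurements show rising global temperatures driven by human emissions", 1),
    ("Fossil evidence and genetic data support evolution by natural selection", 1),
    ("Climate change is a hoax invented by scientists for grant money", 0),
    ("Evolution is just a theory and the fossil record is a lie", 0),
]

weights, bias = train(EXAMPLES)
print(predict_proba(weights, bias, "genetic data and measurements support the evidence"))
print(predict_proba(weights, bias, "it is a hoax and a lie"))
```

A model this small only memorizes telltale words, so it would be easy to fool. The sketch is meant to show the training loop, not to suggest how a 90% success rate on real articles is reached.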

The goal is a web browser extension that would detect when the user is looking at science content and determine whether it’s real or fake. If it’s misinformation, the tool will suggest a reliable web site on that topic. My colleagues and I also plan to gamify the interface with a smart phone app that will let people compete with their friends and relatives to detect fake science. Data from the best of these participants will be used to help train the neural net.

Sniffing out fake science should be easier than sniffing out fake news in general, because subjective opinion plays a minimal role in legitimate science, which is characterized by evidence, logic and verification. Experts can readily distinguish legitimate science from conspiracy theories and arguments motivated by ideology, which means machine learning systems can be trained to do so as well.

“Everyone is entitled to his own opinion, but not his own facts.” These words of Daniel Patrick Moynihan, advisor to four presidents, could be the mantra for those trying to keep science from being drowned by misinformation.

Chris Impey, University Distinguished Professor of Astronomy, University of Arizona

This article is republished from The Conversation under a Creative Commons license. Read the original article. https://creativecommons.org/licenses/by-nd/4.0/